By Maya Indira Ganesh, 17 June 2014

The Numbers

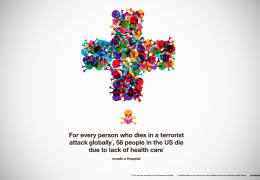

In the 18 months between January 2009 and June 2010, at least 6,334 women in North Kivu province, eastern Democratic Republic of Congo (DRC), were raped by members of the DRC national army and the Rwandan FDLR militia. In South Kivu province the number was close to 7,500. Major General Patrick Cammaert, the former deputy commander of the United Nations mission in the Congo, said that “it is more dangerous to be a woman than a soldier in armed conflict.”

- A United Nations study put the number of incidents of sexual violence in the DRC at about 15,000 per year in 2008 and 2009. Using an entirely different methodology, another study arrived at a figure 26 times higher than the UN estimate.

- A 2011 Economist report looked at the number of women raped in six conflicts, including the 1994 Rwandan Genocide. The report put the number of women raped in that conflict at 500,000. A casual reader might accept this figure at face value, but other studies have put it anywhere between 250,000 and 500,000.

Research on sexual violence in conflict is bedevilled by a lack of credible data. The methods used to collect this data are inherently flawed, which calls into question what the data says about the scale and nature of the problem. At the same time, sexual violence in conflict receives high-profile international attention. As a case study, the social production of data about sexual violence in conflict shows that reliable data about an issue may be near-impossible to arrive at.

Video: Riziki Shobuto from North Kivu in the Democratic Republic of Congo shares her personal story of gang-rape by armed militia.

Behind the Numbers

In 2009, the columnist Nick Kristof wrote a story in the New York Times reporting that 75% of Liberian women had been raped during the civil conflict of 1989-1996. Amelia Hoover-Green, a political scientist at Drexel University in the United States, has spent the last few years grappling with the fact that counting violence on a large scale is inevitably flawed, and looking for ways to work around those flaws. Describing the incident in a personal interview in December 2013, Hoover-Green said that when she and a collaborator, Dara Kay Cohen, read Kristof's story, they were alarmed by the figure he quoted. They did not know where it came from or how accurate it was, so they set out to find out more about it.

Hoover-Green and Cohen were able to show that the figure's source, a study by the World Health Organisation, was a flawed basis for it. The 75% figure had been extrapolated from a study of three particular counties in Liberia, not from a survey sampling the whole population, so it inflated the national picture. Survivors' accounts given to the Liberian Truth & Reconciliation Commission also put the figure considerably lower, at 10-20%. According to Hoover-Green, bad data leads to poor decision-making and the misallocation of resources, and makes it less likely that advocates will be the kind of advocates affected women need. Of the Liberia case, she says:

“Kristof, eager to show that rape was an emergency in Liberia, had mangled the evidence, generalizing from a study of sexual assault survivors to all Liberian women. To be clear, rape was absolutely an emergency in Liberia. Depending on which survey you read, 20 to 40 percent of Liberian women report suffering some form of sexualized violence. The problem, beyond simple inaccuracy, is that Kristof's false generalization defined sexualized violence as “the problem” for Liberian women. Like many of our false generalizations about rape, that’s conveniently simple—but in painting women as rape victims, it erases bigger and more complicated stories about war, violence, displacement, and survival.”

Where is the data coming from?

The social production of data refers to how a research or data collection exercise is structured and carried out, and what happens to the data afterward. Annette Markham writes that data is not a thing or even a set of things, but is, rather, a “powerful frame for discourse about knowledge — both where it comes from and how it is derived; privileges certain ways of knowing over others.”

In this case, some may ask, what is there to know? Rape is rape; does the origin story of the data really matter when the crimes are so horrific? Isn't it bad enough to know that even one woman's rape went unpunished? This is true, but specific numbers allow perpetrators to be tried in courts of law, and allow legal and other services for victims, prevention, law enforcement and awareness-raising to be developed in proportion to the scale of the problem. Accurate data also reveals the actual scale of, and patterns in, the violence.

Not having close approximations of the actual figures, whether because of under- or over-reporting, can be problematic. Tia Palermo says that estimates that are too low

“may belittle the extent of the problem and fail to induce the international community to act. Alternatively if estimates ...are exaggerated, future scenarios might seem less severe in comparison...international organizations and governments may be less inclined to act or to [will] allocate resources inefficiently.”

Accurate or near-accurate numbers are important. Just as important is how they are eventually used in campaigns or policy advocacy. The choices made about what to collect, how to collect it, and from whom or where determine what we can claim to know about the scale or actual extent of a particular issue. Data-based claims about an issue like sexual violence can significantly shape the debates around it, which is why it matters how and where the numbers come from, especially when different numbers emerge on the same issue.

Sampling death in Syria

In some situations, such as conflict, it is almost impossible to estimate how many people have actually been affected, and assessments of the death toll in Syria are a good example of this. Reports have estimated anywhere from 50,000 to 100,000 deaths from the conflict that started in 2011. Appreciating the mathematical challenge of accurately counting how many people have died as a result of the atrocities requires a metaphor:

“Imagine that you have two dark rooms, and you want to know how big the rooms are. You can't go into the rooms and measure. They're dark, and you can't see inside them, but what you do have is a bunch of little rubber balls. Throw the balls in one room, and you hear frequent hollow pinging sounds as they bump into each other. Throw them into the next room, and the sound is less frequent. Even though you can't see inside of either room, you can intuit that the latter room is the larger of the two.”

The metaphor comes from Patrick Ball, a human rights data scientist at the Human Rights Data Analysis Group (HRDAG) in California. One reason Ball's numbers and methods are so well respected is that he is acutely aware of the 'social production of data'. In January 2013, Ball collaborated on Benetech's preliminary assessment of the death toll in Syria. He says of this work:

“We can solve issues of data capture and data management; our bigger problem is sampling the population we need to study in understanding how mass violence works. You cannot claim to see a pattern in the data if your sample is not representative.”
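Ball's rubber-ball metaphor captures the intuition behind capture-recapture estimation: the more often independent records of the same victims "collide" (overlap), the smaller the population they are drawn from. The Human Rights Data Analysis Group is known for a multi-list, model-based version of this idea called multiple systems estimation; the Python sketch below shows only the simplest two-list case, the Lincoln-Petersen estimator. The list sizes are invented for illustration, and the estimator assumes that the two lists are independent and that every victim is equally likely to be recorded, assumptions that rarely hold in real conflict data.

```python
def lincoln_petersen(n1: int, n2: int, overlap: int) -> float:
    """Two-list capture-recapture estimate of total population size.

    n1, n2:  number of distinct victims recorded on list A and on list B
    overlap: number of victims recorded on both lists (the "collisions")
    Assumes independent lists and an equal chance of being recorded.
    """
    if overlap <= 0:
        raise ValueError("lists do not overlap, so the estimate is undefined")
    return n1 * n2 / overlap


# Hypothetical "rooms": identical list sizes, different amounts of overlap.
rooms = {"small room": (500, 400, 200), "large room": (500, 400, 50)}

for name, (n1, n2, overlap) in rooms.items():
    documented = n1 + n2 - overlap  # simple count of unique documented cases
    estimated = lincoln_petersen(n1, n2, overlap)
    print(f"{name}: {documented} documented, about {estimated:.0f} estimated")

# small room: 700 documented, about 1000 estimated
# large room: 850 documented, about 4000 estimated
```

Counting unique cases makes the two rooms look similar (700 versus 850); the overlap between the lists suggests one is four times the size of the other.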

Sampling, the rationale for choosing part of a population to study and then making claims about the whole population on that basis, is one of the first and most fundamental issues that data collection initiatives have to contend with. Getting a representative sample, however, is often near-impossible without significant resources; as a result, much research can be challenged on the grounds that its sample does not support inferences about the entire population.

In the case of sexual violence, things get more complicated because sexual violence in conflict is, as Hoover-Green puts it, “rare and elusive”: it is not evenly distributed in a population, so a randomly selected sample is unlikely to reflect actual patterns. Moreover, unlike death, which is an objective and verifiable reality, sexual violence has no single definition, and there is always the problem of underreporting.
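The effect of violence being "rare and elusive" can be illustrated with a small enumeration. The Python sketch below assumes a hypothetical population of ten equal-sized districts in which violence is concentrated in two hotspots (40% of women affected) and scarce elsewhere (2%); these figures are invented, not drawn from any real survey. It lists every possible survey that samples three whole districts and shows the rate each would report.

```python
from collections import Counter
from itertools import combinations

# Hypothetical population: ten districts of equal size. Violence is
# concentrated in two hotspot districts and scarce everywhere else.
PREVALENCE = [0.40, 0.40] + [0.02] * 8  # share of women affected, per district

true_rate = sum(PREVALENCE) / len(PREVALENCE)


def all_cluster_estimates(prevalence, k):
    """Prevalence estimate from every possible sample of k whole districts."""
    return [sum(sample) / k for sample in combinations(prevalence, k)]


estimates = all_cluster_estimates(PREVALENCE, 3)

print(f"True rate: {true_rate:.1%}")
for est, n in sorted(Counter(round(e, 3) for e in estimates).items()):
    print(f"estimate {est:.1%}: {n} of {len(estimates)} possible samples")

# True rate: 9.6%
# estimate 2.0%: 56 of 120 possible samples
# estimate 14.7%: 56 of 120 possible samples
# estimate 27.3%: 8 of 120 possible samples
```

Averaged over all 120 possible samples the estimates recover the true rate, yet no single sample comes within four percentage points of it, and 56 of them would report a rate of only 2%.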

Hoover-Green, Ball's one-time colleague, found that “basic questions regarding the nature, magnitude, pattern, variation, perpetrators and effects of wartime sexual violence cannot yet be answered with scientific rigor. Stakeholders must also grapple with the fact that no single dataset, no matter how large, can accurately assess conflict-related sexual violence on a population level.”

Hoover-Green has also published a set of guidelines for the measurement of sexual violence in conflict. They offer tips on making inferences from incomplete evidence, and take examples of messy qualitative reports to show how to work through verifying the information in them. The guidelines grew out of her work in Colombia, a case in point of how inadequate statistics on sexual violence in conflict are, and of how near-impossible it is to produce reliable national-level statistics.

Does it matter?

In an interview about her organisation's study of violence against marginalised women in South Asia, Geetanjali Misra, the founder-director of Crea, a women's rights organisation in Delhi, says that data is “part-truth, part-lie”. Misra is referring to the [un]certainty with which we believe that data must reveal truth. What if that truth is false, or the hopes we have invested in data to tackle social issues with dark histories of power are misplaced? If, as Amelia Hoover-Green says, claims cannot be made with scientific rigour, then how does data work in advocacy?

Hoover-Green is interested in what this means for the long-term health of human rights organisations that use data for advocacy. Inaccurate claims about sexual violence heighten the tension between “advocacy organizations' needs for short-term drama and long-term credibility.” If your data is flawed and your organisation keeps using it, what does that mean for the credibility of your work? The perception persists that human rights activists will use any data they can get to make their case, and this does nothing for the credibility of the people they are trying to represent.

The challenge this presents to advocacy groups is to know what they are using the data for and who they are speaking to. A legal team prosecuting rapists and militia leaders at the International Criminal Court for tens of thousands of rapes in conflict absolutely needs accurate or near-accurate data. For a policymaker, similarly, data that isn't rigorously collected or representative can lead to decisions without a solid rationale. For enthusiastic and sincere hackers for change, data may serve a different purpose: bringing diverse technical and advocacy communities together around the issue by playing with datasets. According to Ball, reliable or near-reliable statistics come from data interpreted through a model; merely 'counting cases' does not make for a robust model.

'Pulling out the storylines in data' may be a familiar phrase to anyone working in data journalism or capacity building on data for advocacy. The social production of data is in itself a storyline in and about data worth telling: it may reveal more than the data does.

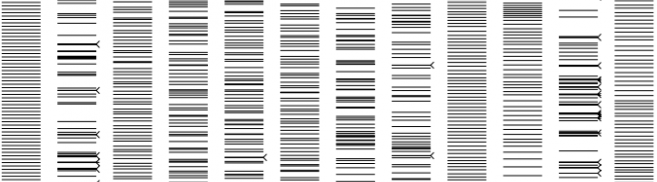

Images and video credits (in order of appearance):

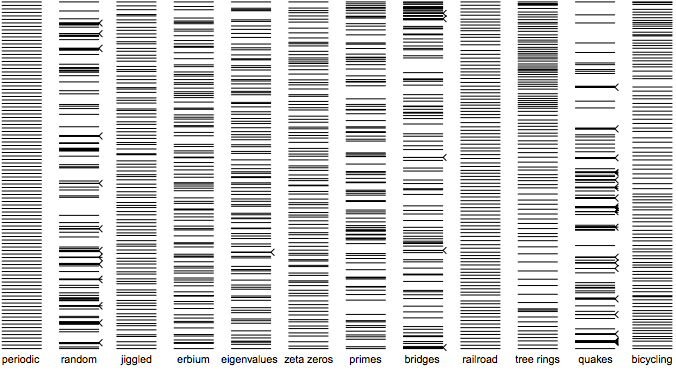

- Header image. This image is taken from the paper 'The Spectrum of Riemannium' by Brian Hayes, reprinted from American Scientist (Vol 91, No 4, 2003). The paper, associated with the Riemann hypothesis, a famous unsolved problem in mathematics, is about how things distribute themselves in time and space. The lines represent the distribution of things as diverse as the growth rings of a fir tree in California from 1884 to 1993 and the lengths of 100 consecutive bike rides. Thanks to @fadesingh for sharing.

- Video: Riziki Shobuto from North Kivu in the Democratic Republic of Congo shares her personal story of gang-rape by armed militia.

Special thanks to Patrick Ball, Amelia Hoover-Green and Geetanjali Misra, and to Anusha Hariharan for research assistance.